Welcome to edition 04 of Connecting Dots. A slightly longer dispatch. Thanks for being part of the journey as I experiment with the format.

WESTMINSTER, UK - It’s a searing and saucy day to be in Britain’s House of Commons. I’m feeling relieved, which is unusual when it comes to discussions on artificial intelligence (AI). Normally when people talk about AI I get the same pit-of-the-stomach unease I felt about social media circa 2005-08, when intelligent-sounding yet artificial point scoring ruled the day.

I was relieved to join a Select Committee all-party policy group panel of MPs, academia and industry sensibly discussing the opportunities and implications of AI. It was full of optimism tempered with caution, capturing the strengths and limits of our social contracts as well as golden doses of AI’s potential, served with pragmatism. It recognized that we have existing legal frameworks and precedents that enable us to address the unprecedented uses of AI that will continue to emerge. Unusually intelligent.

Yet why was this such an unusually intelligent discussion? Why are so many AI discussions consumed with tech utopia or dystopian rejection with seemingly little substance in between?

My working theory is that general technology topics like AI overwhelm our mental models. They trigger the vital defences of the most educated, comfortable and participative “know it all” era ever. We have so many people who are so good at analysis of others’ work and at talking about innovation that there is little time or space left to actually innovate. It is a similar dilemma to that of people and organizations obsessed with drafting strategy yet rarely enacting it.

I’ve seen some great value-creating, AI-powered services and have been a part of a few. I’ve also seen, felt in fact, a lot of angst, unease and superficial attempts. Might you have observed either of these patterns?

Action without thought

Thought without action

Both leave unrealized potential on the table, ending in defeat as the intelligent, well-intentioned and ambitious protagonists are ultimately consumed by their defence mechanisms. Let’s get clinical (zzzzz... sorry): what defence mechanisms do we mean? Scanning the American Psychiatric Association’s catalogue of defence mechanisms, these jumped out as commonly playing out in AI discussions.

Intellectualisation - Avoidance and suppression of the emotional component of an event (e.g. skirting around the implications of the technology or a solution)

Reaction Formation - Substitution of wishes or feelings with the opposite of one’s true feelings (e.g. claiming to want to change through AI but then not actually changing)

Splitting - Unacceptable positive or negative qualities of self or others are suppressed (e.g. just going along with the conversation and ignoring the inevitable iceberg crash)

Rationalisation - Giving socially acceptable explanations for behaviour (e.g. rehashing generic, non-specific ideas or phraseology like “data is the new oil”)

We’re talking AI here, but these mechanisms are actually present whenever any new idea emerges. They protect existing world views and limit one’s exposure to risk. It’s why people celebrate the aphorism of fail fast, but would rather someone else does the failing while they succeed. Which, ironically, is a great way to fail in the long run 🤷‍♂️

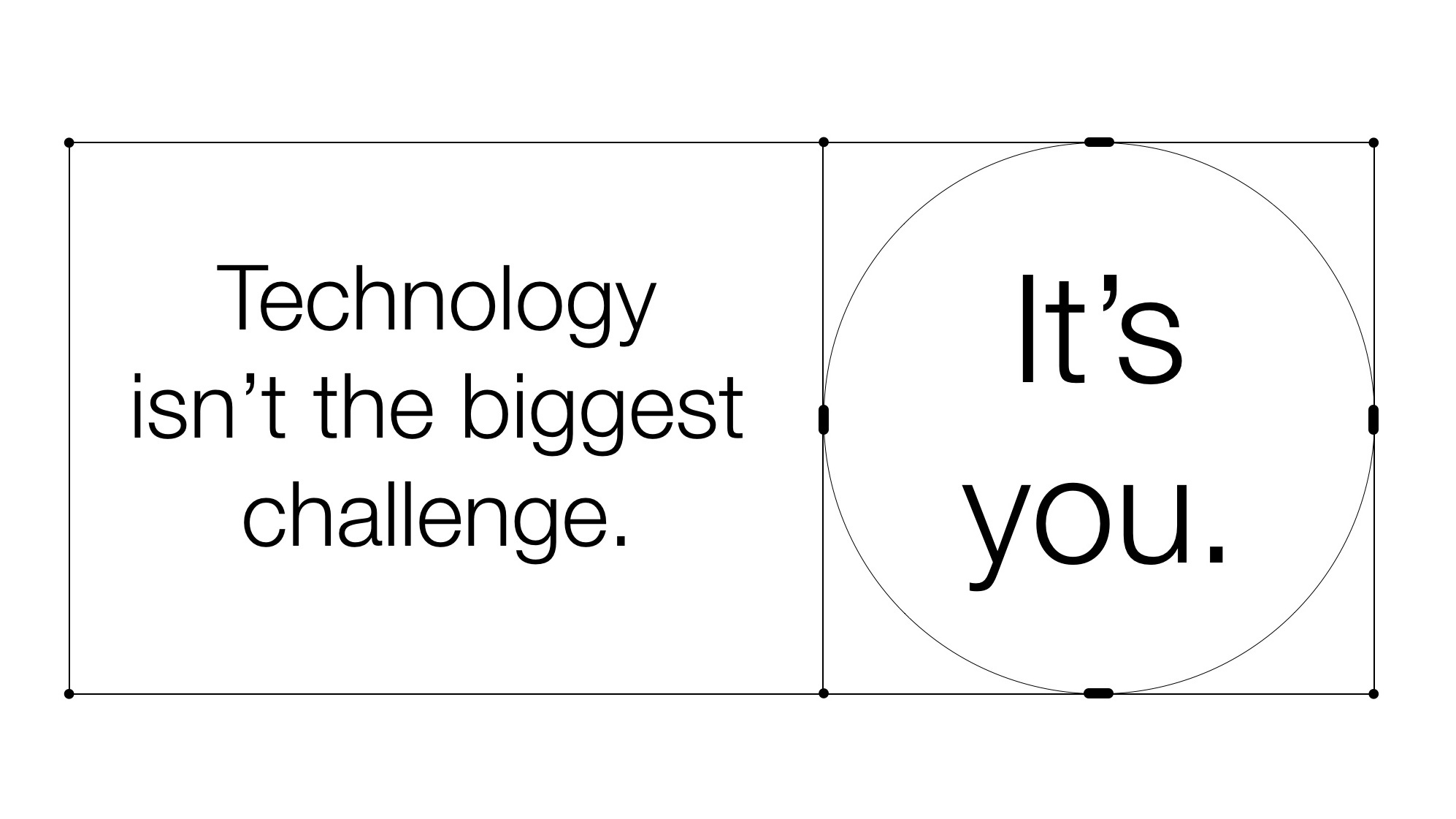

“Is technology the problem? Or the humans?”

Trial & Error

Connecting Dots is about making the complex simple and about multi-disciplinary learning to help leaders innovate for the digital era. It’s an experiment. Thanks for being part of the journey; feedback welcome.

Hungry for more?

MIT Media Lab Director Joi Ito - The Problem With Tech People Who Want to Solve Problems

Inspiration for the above opening image - Building the AI-Powered Organization

Another take on the Artificial Intelligence of Silicon Valley - WeWork and Uber’s Fight Against Reality

Movements

I’m in France at the moment. Trialing a “thesis writing” venue in Burgundy for next year. Much of next week is Fontainebleau/INSEAD then back to London for the rest of July. Say hi for ☕️

May you thrive,

Brett

PS. I’d be grateful if you might forward this newsletter to one, two or three friends and colleagues so we can grow the community. Click here to subscribe at Connecting Dots.

🙏🏻